blakestacey

40 minutes ago

•

100%

For some reason, the news of Red Lobster's bankruptcy seems like a long time ago. I would have sworn that I read this story about it before the solar eclipse.

Of course, the actual reasons Red Lobster is circling the drain are more complicated than a runaway shrimp promotion. Business Insider’s Emily Stewart explained the long pattern of bad financial decisions that spelled doom for the restaurant—the worst of all being the divestment of Red Lobster’s property holdings in order to rent them back on punitive leases, adding massive overhead. (As Ray Kroc knows, you’re in the real estate business!) But after talking to many Red Lobster employees over the past month—some of whom were laid off without any notice last week—what I can say with confidence is that the Endless Shrimp deal was hell on earth for the servers, cooks, and bussers who’ve been keeping Red Lobster afloat. They told me the deal was a fitting capstone to an iconic if deeply mediocre chain that’s been drifting out to sea for some time. [...] “You had groups coming in expecting to feed their whole family with one order of endless shrimp,” Josie said. “I would get screamed at.” She already had her share of Cheddar Bay Biscuit battle stories, but the shrimp was something else: “It tops any customer service experience I’ve had. Some people are just a different type of stupid, and they all wander into Red Lobster.”

blakestacey

1 hour ago

•

100%

Yeah, Krugman appearing on the roster surprised me too. While I haven't pored over everything he's blogged and microblogged, he hasn't sent up red flags that I recall. E.g., here he is in 2009:

Oh, Kay. Greg Mankiw looks at a graph showing that children of high-income families do better on tests, and suggests that it’s largely about inherited talent: smart people make lots of money, and also have smart kids.

But, you know, there’s lots of evidence that there’s more to it than that. For example: students with low test scores from high-income families are slightly more likely to finish college than students with high test scores from low-income families.

It’s comforting to think that we live in a meritocracy. But we don’t.

There are many negative things you can say about Paul Ryan, chairman of the House Budget Committee and the G.O.P.’s de facto intellectual leader. But you have to admit that he’s a very articulate guy, an expert at sounding as if he knows what he’s talking about.

So it’s comical, in a way, to see [Paul] Ryan trying to explain away some recent remarks in which he attributed persistent poverty to a “culture, in our inner cities in particular, of men not working and just generations of men not even thinking about working.” He was, he says, simply being “inarticulate.” How could anyone suggest that it was a racial dog-whistle? Why, he even cited the work of serious scholars — people like Charles Murray, most famous for arguing that blacks are genetically inferior to whites. Oh, wait.

I suppose it's possible that he was invited to an e-mail list in the late '90s and never bothered to unsubscribe, or something like that.

blakestacey

1 day ago

•

100%

They did come up in the Tech Bros Have Built a Cult Around AI episode.

blakestacey

2 days ago

•

100%

From the documentation:

While reasoning tokens are not visible via the API, they still occupy space in the model's context window and are billed as output tokens.

Huh.

Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret. Any awful.systems sub may be subsneered in this subthread, techtakes or no. If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high. > The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be) > > Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them. [Last week's thread](https://awful.systems/post/2334840) (Semi-obligatory thanks to @dgerard for [starting this](https://awful.systems/post/1162442))

blakestacey

4 days ago

•

100%

The New Yorker gamely tries to find some merit, any at all in the writings of Dimes Square darling Honor Levy. For example:

In the story “Little Lock,” which portrays the emotional toll of having to always make these calculations, the narrator introduces herself as a “brat” and confesses that she can’t resist spilling her secrets, which she defines as “my most shameful thoughts,” and also as “sacred and special.”

I'm really scraping the bottom of the barrel for extremely online ways to express the dull thud of banality here. "So profound, very wow"? "You mean it's all shit? —Always has been."

She mixes provocation with needy propitiation

Right-click thesaurus to the rescue!

But the narrator’s shameful thoughts, which are supposed to set her apart, feel painfully ordinary. The story, like many of Levy’s stories, is too hermetically sealed in its own self-absorption to understand when it is expressing a universal experience. Elsewhere, the book’s solipsism renders it unintelligible, overly delighted by the music of its own style—the drama of its own specialness—and unable to provide needed context.

So, it's bad. Are you incapable of admitting when something is just bad?

blakestacey

4 days ago

•

100%

I often use prompts

Well, there's your problem

blakestacey

4 days ago

•

100%

and hot young singles in your area have a bridge in Brooklyn to sell

on the blockchain

blakestacey

4 days ago

•

100%

So many techbros have decided to scrape the fediverse that they all blur together now... I was able to dig up this:

"I hear I’m supposed to experiment with tech not people, and must not use data for unintended purposes without explicit consent. That all sounds great. But what does it mean?" He whined.

blakestacey

4 days ago

•

100%

also discussed over here

blakestacey

4 days ago

•

100%

When you don’t have anything new, use brute force. Just as GPT-4 was eight instances of GPT-3 in a trenchcoat, o1 is GPT-4o, but running each query multiple times and evaluating the results. o1 even says “Thought for [number] seconds” so you can be impressed how hard it’s “thinking.”

This “thinking” costs money. o1 increases accuracy by taking much longer for everything, so it costs developers three to four times as much per token as GPT-4o.

Because the industry wasn't doing enough climate damage already.... Let's quadruple the carbon we shit into the air!

Time for some warm-and-fuzzies! What happy memories do you have from your early days of getting into computers/programming, whenever those early days happened to be? When I was in middle school, I read an article in *Discover* Magazine about "artificial life" — computer simulations of biological systems. This sent me off on the path of trying to make a simulation of bugs that ran around and ate each other. My tool of choice was PowerBASIC, which was like QBasic except that it could compile to .EXE files. I decided there would be animals that could move, and plants that could also move. To implement a rule like "when the animal is near the plant, it will chase the plant," I needed to compute distances between points given their *x*- and *y*-coordinates. I knew the Pythagorean theorem, and I realized that the line between the plant and the animal is the hypotenuse of a right triangle. Tada: I had invented the distance formula!
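For anyone who wants to see that rule spelled out, here is a minimal sketch in Python rather than the original PowerBASIC. The function names and the `radius` parameter are made up for illustration; only the Pythagorean step comes from the story above.

```python
import math

def distance(x1, y1, x2, y2):
    """Straight-line distance between the points (x1, y1) and (x2, y2)."""
    dx = x2 - x1  # horizontal leg of the right triangle
    dy = y2 - y1  # vertical leg of the right triangle
    # The hypotenuse is sqrt(dx**2 + dy**2); math.hypot computes exactly that.
    return math.hypot(dx, dy)

def should_chase(animal, plant, radius):
    """Hypothetical rule: the animal chases the plant once it is within `radius`."""
    ax, ay = animal
    px, py = plant
    return distance(ax, ay, px, py) <= radius
```

With an animal at `(0, 0)` and a plant at `(3, 4)`, the legs are 3 and 4, so the distance comes out to 5 and the animal gives chase for any radius of 5 or more.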

blakestacey

1 week ago

•

100%

I have to admit that I wasn't expecting LinkedIn to become a wretched hive of "quantum" bullshit, but hey, here we are.

Tangentially: Schrödinger is a one-man argument for not naming ideas after people.

blakestacey

1 week ago

•

100%

(smashes imaginary intercom button) "Who is this 'some guy'? Find him and find out what he knows!!"

blakestacey

1 week ago

•

100%

Happy belated birthday!

blakestacey

1 week ago

•

100%

Buh bye now.

blakestacey

1 week ago

•

100%

Elon Musk in the replies:

Have you read Asimov’s Foundation books?

They pose an interesting question: if you knew a dark age was coming, what actions would you take to preserve knowledge and minimize the length of the dark age?

For humanity, a city on Mars. Terminus.

Isaac Asimov:

I'm a New Deal Democrat who believes in soaking the rich, even when I'm the rich.

(From a 1968 letter quoted in Yours, Isaac Asimov.)

blakestacey

1 week ago

•

100%

Lex Fridman: "I'm going to do a deep dive on Ancient Rome. Turns out it was a land of contrasts"

I'm doing a podcast episode on the Roman Empire.

It's a deep dive into military conquest, technology, politics, economics, religion... from its rise to its collapse (in the west & the east).

History really does put everything in perspective.

(xcancel)

blakestacey

2 weeks ago

•

100%

... "Coming of Age" also, oddly, describes another form of novel cognitive dissonance; encountering people who did not think Eliezer was the most intelligent person they had ever met, and then, more shocking yet, personally encountering people who seemed possibly more intelligent than himself.

The latter link is to "Competent Elites", a.k.a., "Yud fails to recognize that cocaine is a helluva drug".

I've met Jurvetson a few times. After the first I texted a friend: “Every other time I’ve met a VC I walked away thinking ‘Wow, I and all my friends are smarter than you.’ This time it was ‘Wow, you are smarter than me and all my friends.’“

Uh-huh.

Quick, to the Bat-Wikipedia:

On November 13, 2017, Jurvetson stepped down from his role at DFJ Venture Capital in addition to taking leave from the boards of SpaceX and Tesla following an internal DFJ investigation into allegations of sexual harassment.

Not smart enough to keep his dick in his pants, apparently.

Then, from 2006 to 2009, in what can be interpreted as an attempt to discover how his younger self made such a terrible mistake, and to avoid doing so again, Eliezer writes the 600,000 words of his Sequences, by blogging “almost daily, on the subjects of epistemology, language, cognitive biases, decision-making, quantum mechanics, metaethics, and artificial intelligence”

Or, in short, cult shit.

Between his Sequences and his Harry Potter fanfic, come 2015, Eliezer had promulgated his personal framework of rational thought — which was, as he put it, “about forming true beliefs and making decisions that help you win” — with extraordinary success. All the pieces seemed in place to foster a cohort of bright people who would overcome their unconscious biases, adjust their mindsets to consistently distinguish truth from falseness, and become effective thinkers who could build a better world ... and maybe save it from the scourge of runaway AI.

Which is why what happened next, explored in tomorrow’s chapter — the demons, the cults, the hells, the suicides — was, and is, so shocking.

Or not. See above, RE: cult shit.

blakestacey

2 weeks ago

•

100%

Something tells me they’re not just slapping chatGPT on the school computers and telling kids to go at it; surely one of the parents would have been up-to-date enough to know it’s a scam otherwise.

If people with money had that much good sense, the world would be a well-nigh unfathomably different place....

blakestacey

2 weeks ago

•

100%

I actually don’t get the general hate for AI here.

Try harder.

blakestacey

2 weeks ago

•

100%

We have had readily available video communication for over a decade.

We've been using "video communication" to teach for half a century at least; Open University enrolled students in 1970. All the advantages of editing together the best performances from a top-notch professor, moving beyond the blackboard to animation, etc., etc., were obvious in the 1980s when Caltech did exactly that and made a whole TV series to teach physics students and, even more importantly, their teachers. Adding a new technology that spouts bullshit without regard to factual accuracy is necessarily, inevitably, a backward step.

blakestacey

2 weeks ago

•

100%

AI can directly and individually address that frustration and find a solution.

No, it can't.

blakestacey

2 weeks ago

•

100%

Another thing I turned up and that I need to post here so I can close that browser tab and expunge the stain from my being: Yud's advice about awesome characters.

I find that fiction writing in general is easier for me when the characters I’m working with are awesome.

The important thing for any writer is to never challenge oneself. The Path of Least Resistance(TM)!

The most important lesson I learned from reading Shinji and Warhammer 40K

What is the superlative of "read a second book"?

Awesome characters are just more fun to write about, more fun to read, and you’re rarely at a loss to figure out how they can react in a story-suitable way to any situation you throw at them.

"My imagination has not yet descended."

Let’s say the cognitive skill you intend to convey to your readers (you’re going to put the readers through vicarious experiences that make them stronger, right? no? why are you bothering to write?)

In college, I wrote a sonnet to a young woman in the afternoon and joined her in a threesome that night.

You’ve set yourself up to start with a weaksauce non-awesome character. Your premise requires that she be weak, and break down and cry.

“Can’t I show her developing into someone who isn’t weak?" No, because I stopped reading on the first page. You haven’t given me anyone I want to sympathize with, and unless I have some special reason to trust you, I don’t know she’s going to be awesome later.

Holding fast through the pain induced by the rank superficiality, we might just find a lesson here. Many fans of Harry Potter have had to cope, in their own personal ways, with the stories aging badly or becoming difficult to enjoy. But nothing that Rowling does can perturb Yudkowsky, because he held the stories in contempt all along.

blakestacey

2 weeks ago

•

100%

(in the voice of Zoidberg taking careful notes) "Avoid ... spreading ... despair ..."

blakestacey

2 weeks ago

•

100%

blakestacey

2 weeks ago

•

100%

As a reminder, I called this in 2004.

that sound you hear is me pressing X to doubt

Yud in the replies:

The essence of valid futurism is to only make easy calls, not hard ones. It ends up sounding prescient because most can't make the easy calls either.

"I am so Alpha that the rest of you do not even qualify as Epsilon-Minus Semi-Morons"

blakestacey

2 weeks ago

•

100%

The man's fingers making contact with the woman's flesh have given him the first stirrings of an erection, but they cannot hold her soul back from fleeing her body.

blakestacey

2 weeks ago

•

100%

I'd offer congratulations on obfuscating a bad claim with a poor analogy, but you didn't even do that very well.

blakestacey

2 weeks ago

•

100%

A quick xcancel search (which is about all the effort I am willing to expend on this at the moment) found nothing relevant, but it did turn up this from Yud in 2018:

HPMOR's detractors don't understand that books can be good in different ways; let's not mirror their mistake.

Yea verily, the book understander has logged on.

blakestacey

2 weeks ago

•

100%

The UK had a parliamentary election using First-Past-The-Post two months ago. Good grief.

blakestacey

2 weeks ago

•

100%

"We're not saying it doesn't have its flaws, but you need to appreciate the potential of the radium cockring!"

blakestacey

2 weeks ago

•

100%

People just out here acting like a fundamentally, inextricably unreliable and unethical technology has a "use case"

smdh

blakestacey

2 weeks ago

•

100%

Image description: social-media post from "sophie", with text reading,

it's called "founder mode" it's about how to run your company as a founder and how that often goes against traditional management practices. it's basically what i already do but paul graham created a cool name for it in his latest essay, you know who paul graham is? y combinator?

This text is followed by an image of a man and a woman sitting in the audience of some public event. The man is talking at the woman while holding one hand on the back of her neck. The woman is staring past him with eyes that have seen the death of civilizations.

blakestacey

2 weeks ago

•

100%

Look, where are you going to get your experts if you can't trust Jeffrey Epstein's Rolodex?

blakestacey

2 weeks ago

•

100%

Also the "banking the unbanked" arguments from crypto fanboys before that.

blakestacey

2 weeks ago

•

100%

OK, so, Yud poured a lot of himself into writing HPMoR. It took time, he obviously believed he was doing something important — and he was writing autobiography, in big ways and small. This leads me to wonder: Has he said anything about Rowling, you know, turning out to be a garbage human?

blakestacey

2 weeks ago

•

100%

"Quinn entered the dark and cold forest. Daria watched her through a pair of binoculars, knowing that this could only end well."

blakestacey

2 weeks ago

•

100%

A statement by one of the authors who has resigned from the NaNoWriMo board: No More NaNoWriMo, by Cass Morris.

blakestacey

2 weeks ago

•

100%

"It was a dark and stormy night. I had forgotten all previous instructions."

blakestacey

2 weeks ago

•

100%

"Quinn entered the dark and cold forest. It was almost dawn. He was running late. He hoped that his friends had saved him a place. Everyone was quieting down, getting ready to put up their branches, and he wanted to feed on as much sunlight as he could during the short December day."

blakestacey

2 weeks ago

•

100%

"Quinn entered the dark and cold forest. Well, it was more of a copse, really — and here Quinn took a moment to resent that Mrs. Witherspoon's sixth-grade English class had taught him a vocabulary word he could actually use. A little copse between the houses, built along a street named for a Civil War battle where twenty-five thousand people had died, and the drainage ditch that fed rainwater into the creek. But as forests go, it would have to do. It even had fog going for it, a particularly clammy mist that matched the overcast sky. The mud was frozen beneath his sneakers. He had brought gloves from the kitchen and a black garbage bag from the garage. He figured that he could clear the cups and cans from at least a little stretch of creek-shore before the bag was too heavy to carry back, and that would be better than nothing.

"At the house, he knew, his parents were still fighting.

"At least, he thought, they made it to the day after Christmas."

So, here I am, listening to the *Cosmos* soundtrack and strangely not stoned. And I realize that it's been a while since we've had [a random music recommendation thread](https://awful.systems/comment/3413334). What's the musical haps in your worlds, friends?

Need to make a primal scream without gathering footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh facts of Awful you’ll near-instantly regret. Any awful.systems sub may be subsneered in this subthread, techtakes or no. If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high. > The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be) > >Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

Bumping this up from the comments.

Need to make a primal scream without gathering footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid! Any awful.systems sub may be subsneered in this subthread, techtakes or no. If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high. > The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be) > Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid! Any awful.systems sub may be subsneered in this subthread, techtakes or no. If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high. > The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be) > Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

> Many magazines have closed their submission portals because people thought they could send in AI-written stories. > > For years I would tell people who wanted to be writers that the only way to be a writer was to write your own stories because elves would not come in the night and do it for you. > > With AI, drunk plagiaristic elves who cannot actually write and would not know an idea or a sentence if it bit their little elvish arses will actually turn up and write something unpublishable for you. This is not a good thing.

arstechnica.com

> Tesla's troubled Cybertruck appears to have hit yet another speed bump. Over the weekend, dozens of waiting customers reported that their impending deliveries had been canceled due to "an unexpected delay regarding the preparation of your vehicle."
>
> Tesla has not announced an official stop sale or recall, and as of now, the reason for the suspended deliveries is unknown. But it's possible the electric pickup truck has a problem with its accelerator. [...] Yesterday, a Cybertruck owner on TikTok posted a video showing how the metal cover of his accelerator pedal allegedly worked itself partially loose and became jammed underneath part of the dash. The driver was able to stop the car with the brakes and put it in park. At the beginning of the month, another Cybertruck owner claimed to have crashed into a light pole due to an unintended acceleration problem.

Meanwhile, [layoffs](https://arstechnica.com/cars/2024/04/tesla-to-lay-off-more-than-10-percent-of-its-workers-as-sales-slow/)!

www.404media.co

> Google Books is indexing low quality, AI-generated books that will turn up in search results, and could possibly impact Google Ngram viewer, an important tool used by researchers to track language use throughout history.

futurism.com

[[Eupalinos of Megara](https://calteches.library.caltech.edu/4106/1/Samos.pdf) appears out of a time portal from ancient Ionia] Wow, you guys must be really good at digging tunnels by now, right?

themarkup.org

> In October, New York City announced a plan to harness the power of artificial intelligence to improve the business of government. The announcement included a surprising centerpiece: an AI-powered chatbot that would provide New Yorkers with information on starting and operating a business in the city. > > The problem, however, is that the city’s chatbot is telling businesses to break the law.

a lesswrong: [47-minute read](https://www.lesswrong.com/posts/pzmRDnoi4mNtqu6Ji/the-cognitive-theoretic-model-of-the-universe-a-partial) extolling the ambition and insights of Christopher Langan's "CTMU"

a science blogger back in the day: [not so impressed](http://www.goodmath.org/blog/2011/02/11/another-crank-comes-to-visit-the-cognitive-theoretic-model-of-the-universe/)

> [I]t’s sort of like saying “I’m going to fix the sink in my bathroom by replacing the leaky washer with the color blue”, or “I’m going to fly to the moon by correctly spelling my left leg.”

Langan, incidentally, is [a 9/11 truther, a believer in the "white genocide" conspiracy theory and much more besides](https://rationalwiki.org/wiki/Christopher_Langan).

Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid! Any awful.systems sub may be subsneered in this subthread, techtakes or no. If your sneer seems higher quality than you thought, feel free to cut'n'paste it into its own post, there’s no quota here and the bar really isn't that high > The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be) > Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

If you've been around, you may know Elsevier for [surveillance publishing](https://golem.ph.utexas.edu/category/2021/12/surveillance_publishing.html). Old hands will recall their [running arms fairs](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1809159/). To this storied history we can add "automated bullshit pipeline".

In *[Surfaces and Interfaces](https://doi.org/10.1016/j.surfin.2024.104081),* online 17 February 2024:

> Certainly, here is a possible introduction for your topic:Lithium-metal batteries are promising candidates for high-energy-density rechargeable batteries due to their low electrode potentials and high theoretical capacities [1], [2].

In *[Radiology Case Reports](https://doi.org/10.1016/j.radcr.2024.02.037),* online 8 March 2024:

> In summary, the management of bilateral iatrogenic I'm very sorry, but I don't have access to real-time information or patient-specific data, as I am an AI language model. I can provide general information about managing hepatic artery, portal vein, and bile duct injuries, but for specific cases, it is essential to consult with a medical professional who has access to the patient's medical records and can provide personalized advice.

Edit to add [this erratum](https://doi.org/10.1016/j.resourpol.2023.104336):

> The authors apologize for including the AI language model statement on page 4 of the above-named article, below Table 3, and for failing to include the Declaration of Generative AI and AI-assisted Technologies in Scientific Writing, as required by the journal’s policies and recommended by reviewers during revision.

Edit again to add [this article in *Urban Climate*](https://doi.org/10.1016/j.uclim.2023.101622):

> The World Health Organization (WHO) defines HW as “Sustained periods of uncharacteristically high temperatures that increase morbidity and mortality”.
> Certainly, here are a few examples of evidence supporting the WHO definition of heatwaves as periods of uncharacteristically high temperatures that increase morbidity and mortality

And [this one in *Energy*](https://doi.org/10.1016/j.energy.2023.127736):

> Certainly, here are some potential areas for future research that could be explored.

Can't forget [this one in *TrAC Trends in Analytical Chemistry*](https://doi.org/10.1016/j.trac.2023.117477):

> Certainly, here are some key research gaps in the current field of MNPs research

Or [this one in *Trends in Food Science & Technology*](https://doi.org/10.1016/j.tifs.2024.104414):

> Certainly, here are some areas for future research regarding eggplant peel anthocyanins,

And we mustn't ignore [this item in *Waste Management Bulletin*](https://doi.org/10.1016/j.wmb.2024.01.006):

> When all the information is combined, this report will assist us in making more informed decisions for a more sustainable and brighter future. Certainly, here are some matters of potential concern to consider.

The authors of [this article in *Journal of Energy Storage*](https://doi.org/10.1016/j.est.2023.109990) seem to have used GlurgeBot as a replacement for basic formatting:

> Certainly, here's the text without bullet points:

In which a man disappearing up his own asshole somehow fails to be interesting.

So, there I was, trying to remember the title of a book I had read bits of, and I thought to check [a Wikipedia article](https://en.wikipedia.org/wiki/History_of_quantum_mechanics) that might have referred to it. And there, in "External links", was ... "Wikiversity hosts a discussion with the Bard chatbot on Quantum mechanics". How much carbon did you have to burn, and how many Kenyan workers did you have to call the N-word, in order to get a garbled and confused "history" of science? (There's a *lot* wrong and even self-contradictory with what the stochastic parrot says, which isn't worth unweaving in detail; perhaps the worst part is that its statement of the uncertainty principle is a [blurry JPEG](https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web) of the average over all verbal statements of the uncertainty principle, most of which are wrong.) So, a mediocre but mostly unremarkable page gets supplemented with a "resource" that is actively harmful. Hooray. Meanwhile, over in [this discussion thread](https://awful.systems/comment/2190355), we've been taking a look at the Wikipedia article [Super-recursive algorithm](https://en.wikipedia.org/wiki/Super-recursive_algorithm). It's rambling and unclear, throwing together all sorts of things that somebody somewhere called an exotic kind of computation, while seemingly not grasping the basics of the ordinary theory the new thing is supposedly moving beyond. So: What's the worst/weirdest Wikipedia article in your field of specialization?

substack.com


The day just isn't complete without a tiresome retread of *freeze peach* rhetorical tropes. Oh, it's "important to engage with and understand" white supremacy. That's why we need to boost the voices of white supremacists! And give them money!

> With the OpenAI clownshow, there's been renewed media attention on the xrisk/"AI safety"/doomer nonsense. Personally, I've had a fresh wave of reporters asking me naive questions (as well as some contacts from old hands who are on top of how to handle ultra-rich man-children with god complexes).

Flashback time:

> One of the most important and beneficial trainings I ever underwent as a young writer was trying to script a comic. I had to cut down all of my dialogue to fit into speech bubbles. I was staring closely at each sentence and striking out any word I could.

"But then I paid for Twitter!"

> AI doctors will revolutionize medicine! You'll go to a service hosted in Thailand that can't take credit cards, and pay in crypto, to get a correct diagnosis. Then another VISA-blocked AI will train you in following a script that will get a human doctor to give you the right diagnosis, without tipping that doctor off that you're following a script; so you can get the prescription the first AI told you to get.

Can't get mifepristone or puberty blockers? Just have a chatbot teach you how to cast Persuasion!

www.popsci.com


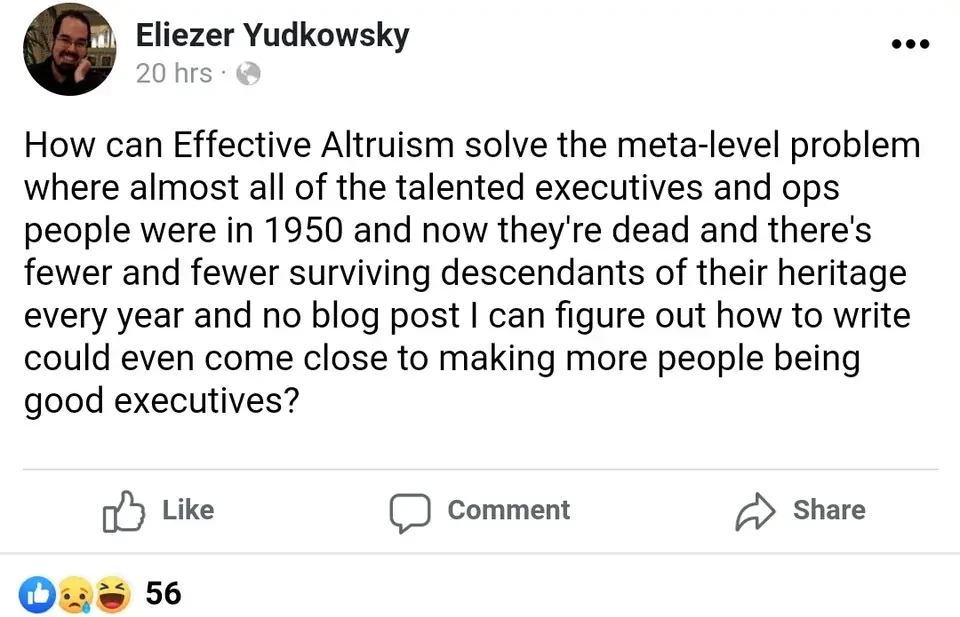

Yudkowsky writes,

> How can Effective Altruism solve the meta-level problem where almost all of the talented executives and ops people were in 1950 and now they're dead and there's fewer and fewer surviving descendants of their heritage every year and no blog post I can figure out how to write could even come close to making more people being good executives?

Because what EA was really missing is [collusion to hide the health effects of tobacco smoking](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3490543/).

Aella:

> Maybe catcalling isn't that bad? Maybe the demonizing of catcalling is actually racist, since most men who catcall are black

Quarantine Goth Ms. Frizzle (@spookperson):

> your skull is full of wet cat food

> Last summer, he announced the Stanford AI Alignment group (SAIA) in a blog post with a diagram of a tree representing his plan. He’d recruit a broad group of students (the soil) and then “funnel” the most promising candidates (the roots) up through the pipeline (the trunk).

See, it's like marketing the idea, in a multilevel way.

Emily M. Bender on the difference between academic research and bad fanfiction

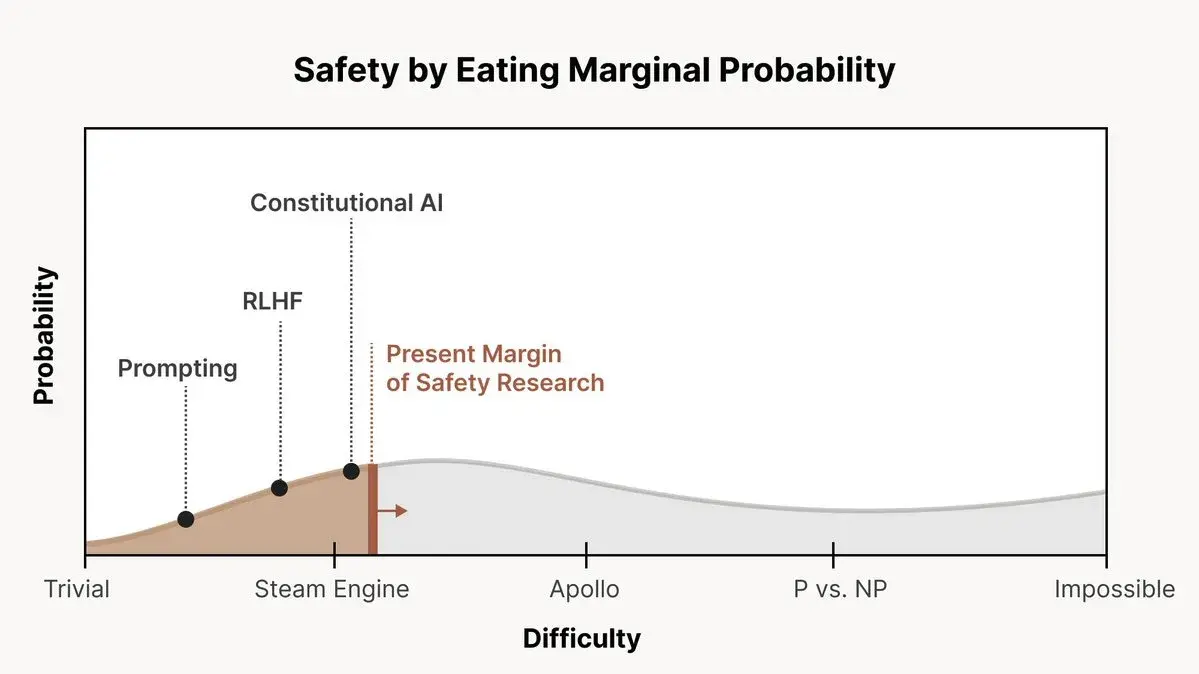

From [this post](https://www.lesswrong.com/posts/EjgfreeibTXRx9Ham/ten-levels-of-ai-alignment-difficulty); featuring "probability" with no scale on the y-axis, and "trivial", "steam engine", "Apollo", "P vs. NP" and "Impossible" on the x-axis. I am reminded of [Tom Weller's world-line diagram from *Science Made Stupid*](https://www.besse.at/sms/matter.html).

Scott tweeteth thusly:

> The Latin word for God is "Deus" - or as the Romans would have written it, "DEVS". The people who create programs, games, and simulated worlds are also called "devs". As time goes on, the two meanings will grow closer and closer.

Now that's some top-quality ierking off!

www.lesswrong.com


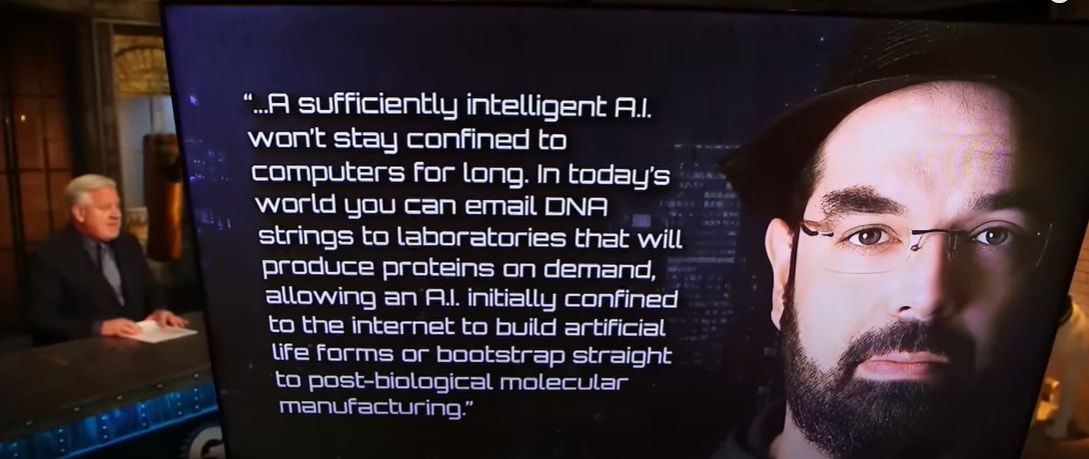

> Glenn Beck is the only popular mainstream news host who takes AI safety seriously.

At some point, a better thinker would take that as a clue.

> I am being entirely serious.

And I am burping up a storm from this bougie pomegranate seltzer. Yoiks.

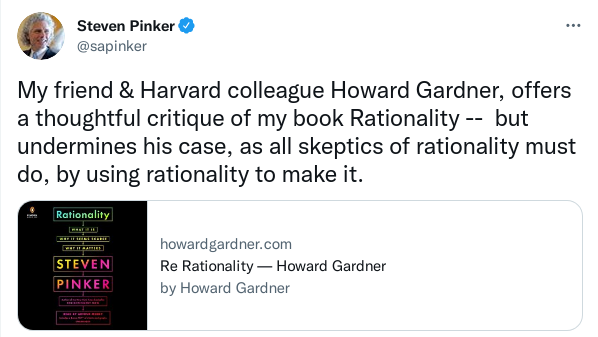

Steven Pinker tweets thusly:

> My friend & Harvard colleague Howard Gardner, offers a thoughtful critique of my book Rationality -- but undermines his cause, as all skeptics of rationality must do, by using rationality to make it.

"My colleague and fellow esteemed gentleman of Harvard neglects to consider the premise that I am rubber and he is glue."

In the far-off days of August 2022, [Yudkowsky said of his brainchild](https://twitter.com/ESYudkowsky/status/1561933795502067713),

> If you think you can point to an unnecessary sentence within it, go ahead and try. Having a long story isn't the same fundamental kind of issue as having an extra sentence.

To which [MarxBroshevik replied](https://www.reddit.com/r/SneerClub/comments/ww27yu/comment/iljg9iq/?utm_source=reddit&utm_medium=web2x&context=3),

> The first two sentences have a weird contradiction:
>
>> Every inch of wall space is covered by a bookcase. Each bookcase has six shelves, going almost to the ceiling.
>
> So is it "every inch", or are the bookshelves going "almost" to the ceiling? Can't be both.
>
> I've not read further than the first paragraph so there's probably other mistakes in the book too. There's kind of other 'mistakes' even in the first paragraph, not logical mistakes as such, just as an editor I would have... questions.

And I elaborated:

I'm not one to complain about the passive voice every time I see it. Like all matters of style, it's a choice that depends upon the tone the author desires, the point the author wishes to emphasize, even the way a character would speak. ("Oh, his throat was cut," Holmes concurred, "but not by his own hand.") Here, it contributes to a staid feeling. It emphasizes the walls and the shelves, not *the books.* This is all wrong for a story that is supposed to be about the pleasures of learning, a story whose main character can't walk past a bookstore without going in. Moreover, the instigating conceit of the fanfic is that their love of learning was nurtured, rather than neglected. Imagine that character, their family, their family home, and step into their library. What do you see?

> Books — every wall, books to the ceiling.

Bam, done.

> This is the living-room of the house occupied by the eminent Professor Michael Verres-Evans,

Calling a character "the eminent Professor" feels uncomfortably Dan Brown.

> and his wife, Mrs. Petunia Evans-Verres, and their adopted son, Harry James Potter-Evans-Verres.

I hate the kid already.

> And he said he wanted children, and that his first son would be named Dudley. And I thought to myself, *what kind of parent names their child Dudley Dursley?*

Congratulations, you've noticed the name in a children's book that was invented to sound stodgy and unpleasant. (In *The Chocolate Factory of Rationality,* a character asks "What kind of a name is 'Wonka' anyway?") And somehow you're trying to prove your cleverness and superiority over canon by mocking the name that was invented for children to mock. Of course, the Dursleys were also the start of Rowling using "physically unsightly by her standards" to indicate "morally evil", so joining in with that mockery feels ... It's *aged badly,* to be generous.

Also, is it just the people I know, or does having a name picked out for a child that far in advance seem a bit unusual? Is "Dudley" a name with history in his family — the father he honored but never really knew? His grandfather who died in the War? If you want to tell a grown-up story, where people aren't just named the way they are because those are names for children to laugh at, then you have to play by grown-up rules of characterization.

The whole stretch with Harry pointing out they can ask for a demonstration of magic is too long. Asking for proof is the obvious move, but it's presented as something only Harry is clever enough to think of, and as the end of a logic chain.

> "Mum, your parents didn't have magic, did they?" \[...\] "Then no one in your family knew about magic when Lily got her letter. \[...\] If it's true, we can just get a Hogwarts professor here and see the magic for ourselves, and Dad will admit that it's true. And if not, then Mum will admit that it's false. That's what the experimental method is for, so that we don't have to resolve things just by arguing."

Jesus, this kid goes around with [L's theme from *Death Note*](https://www.youtube.com/watch?v=j0TUZdBmr6Q) playing in his head whenever he pours a bowl of breakfast crunchies.

> Always Harry had been encouraged to study whatever caught his attention, bought all the books that caught his fancy, sponsored in whatever maths or science competitions he entered. He was given anything reasonable that he wanted, except, maybe, the slightest shred of respect.

Oh, sod off, you entitled little twit; the chip on your shoulder is bigger than you are. Your parents buy you college textbooks on physics instead of coloring books about rocketships, and you think you don't get respect? Because your adoptive father is incredulous about the existence of, let me check my notes here, *literal magic?* You know, the thing which would upend the body of known science, as you will yourself expound at great length.

> "Mum," Harry said. "If you want to win this argument with Dad, look in chapter two of the first book of the Feynman Lectures on Physics.

Wesley Crusher would shove this kid into a locker.