
Oobabooga Text Generation

It's been a while since I checked the latest. I'm sure tons have been released, but I have no idea what's good now.

Could someone recommend an LLM for the Nvidia GTX 1080? I've used the gptq_model-4bit-128g of Luna AI from TheBloke, and I get a response every 30-60 seconds and only 4-5 prompts before it starts to repeat or hallucinate.

cross-posted from: https://lemmy.world/post/2219010

> Hello everyone!
>
> We have officially hit 1,000 subscribers! How exciting!! Thank you for being a member of !fosai@lemmy.world. Whether you're a casual passerby, a hobby technologist, or an up-and-coming AI developer - I sincerely appreciate your interest and support in a future that is free and open for all.
>
> It can be hard to keep up with the rapid developments in AI, so I have decided to pin this at the top of our community to be a frequently updated LLM-specific resource hub and model index for all of your adventures in FOSAI.
>
> The ultimate goal of this guide is to become a gateway resource for anyone looking to get into free open-source AI (particularly text-based large language models). I will be doing a similar guide for image-based diffusion models soon!
>
> In the meantime, I hope you find what you're looking for! Let me know in the comments if there is something I missed so that I can add it to the guide for everyone else to see.
>
> ---
>
> ## **Getting Started With Free Open-Source AI**
>
> Have no idea where to begin with AI / LLMs? Try starting with our [Lemmy Crash Course for Free Open-Source AI](https://lemmy.world/post/76020).
>
> When you're ready to explore more resources see our [FOSAI Nexus](https://lemmy.world/post/814816) - a hub for all of the major FOSS & FOSAI on the cutting/bleeding edges of technology.
>
> If you're looking to jump right in, I recommend downloading [oobabooga's text-generation-webui](https://github.com/oobabooga/text-generation-webui) and installing one of the LLMs from [TheBloke](https://huggingface.co/TheBloke) below.
>
> Try both GGML and GPTQ variants to see which model type performs to your preference. See the hardware table to get a better idea on which parameter size you might be able to run (3B, 7B, 13B, 30B, 70B).
>
> ### **8-bit System Requirements**
>
> | Model | VRAM Used | Minimum Total VRAM | Card Examples | RAM/Swap to Load* |
> |-----------|-----------|--------------------|-------------------|-------------------|
> | LLaMA-7B | 9.2 GB | 10 GB | 3060 12GB, 3080 10GB | 24 GB |
> | LLaMA-13B | 16.3 GB | 20 GB | 3090, 3090 Ti, 4090 | 32 GB |
> | LLaMA-30B | 36 GB | 40 GB | A6000 48GB, A100 40GB | 64 GB |
> | LLaMA-65B | 74 GB | 80 GB | A100 80GB | 128 GB |
>
> ### **4-bit System Requirements**
>
> | Model | Minimum Total VRAM | Card Examples | RAM/Swap to Load* |
> |-----------|--------------------|--------------------------------|-------------------|
> | LLaMA-7B | 6 GB | GTX 1660, 2060, AMD 5700 XT, RTX 3050, 3060 | 6 GB |
> | LLaMA-13B | 10 GB | AMD 6900 XT, RTX 2060 12GB, 3060 12GB, 3080, A2000 | 12 GB |
> | LLaMA-30B | 20 GB | RTX 3080 20GB, A4500, A5000, 3090, 4090, 6000, Tesla V100 | 32 GB |
> | LLaMA-65B | 40 GB | A100 40GB, 2x3090, 2x4090, A40, RTX A6000, 8000 | 64 GB |
>
> *System RAM (not VRAM), is utilized to initially load a model. You can use swap space if you do not have enough RAM to support your LLM.
>
> When in doubt, try starting with 3B or 7B models and work your way up to 13B+.
>
> ### **FOSAI Resources**
>
> **Fediverse / FOSAI**
> - [The Internet is Healing](https://www.youtube.com/watch?v=TrNE2fSCeFo)
> - [FOSAI Welcome Message](https://lemmy.world/post/67758)
> - [FOSAI Crash Course](https://lemmy.world/post/76020)
> - [FOSAI Nexus Resource Hub](https://lemmy.world/post/814816)
>
> **LLM Leaderboards**
> - [HF Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)
> - [LMSYS Chatbot Arena](https://chat.lmsys.org/?leaderboard)
>
> **LLM Search Tools**
> - [LLM Explorer](https://llm.extractum.io/)
> - [Open LLMs](https://github.com/eugeneyan/open-llms)
>
> ---
>
> ## **Large Language Model Hub**
>
> [Download Models](https://huggingface.co/TheBloke)
>
> ### [oobabooga](https://github.com/oobabooga/text-generation-webui)
> text-generation-webui - a big community favorite gradio web UI by oobabooga designed for running almost any free open-source and large language models downloaded off of [HuggingFace](https://huggingface.co/TheBloke) which can be (but not limited to) models like LLaMA, llama.cpp, GPT-J, Pythia, OPT, and many others. Its goal is to become the [AUTOMATIC1111/stable-diffusion-webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui) of text generation. It is highly compatible with many formats.
>
> ### [Exllama](https://github.com/turboderp/exllama)
> A standalone Python/C++/CUDA implementation of Llama for use with 4-bit GPTQ weights, designed to be fast and memory-efficient on modern GPUs.
>
> ### [gpt4all](https://github.com/nomic-ai/gpt4all)
> Open-source assistant-style large language models that run locally on your CPU. GPT4All is an ecosystem to train and deploy powerful and customized large language models that run locally on consumer-grade processors.
>
> ### [TavernAI](https://github.com/TavernAI/TavernAI)
> The original branch of software SillyTavern was forked from. This chat interface offers very similar functionalities but has less cross-client compatibilities with other chat and API interfaces (compared to SillyTavern).
>
> ### [SillyTavern](https://github.com/SillyTavern/SillyTavern)
> Developer-friendly, Multi-API (KoboldAI/CPP, Horde, NovelAI, Ooba, OpenAI+proxies, Poe, WindowAI(Claude!)), Horde SD, System TTS, WorldInfo (lorebooks), customizable UI, auto-translate, and more prompt options than you'd ever want or need. Optional Extras server for more SD/TTS options + ChromaDB/Summarize. Based on a fork of TavernAI 1.2.8
>
> ### [Koboldcpp](https://github.com/LostRuins/koboldcpp)
> A self contained distributable from Concedo that exposes llama.cpp function bindings, allowing it to be used via a simulated Kobold API endpoint. What does it mean? You get llama.cpp with a fancy UI, persistent stories, editing tools, save formats, memory, world info, author's note, characters, scenarios and everything Kobold and Kobold Lite have to offer. In a tiny package around 20 MB in size, excluding model weights.
>
> ### [KoboldAI-Client](https://github.com/KoboldAI/KoboldAI-Client)
> This is a browser-based front-end for AI-assisted writing with multiple local & remote AI models. It offers the standard array of tools, including Memory, Author's Note, World Info, Save & Load, adjustable AI settings, formatting options, and the ability to import existing AI Dungeon adventures. You can also turn on Adventure mode and play the game like AI Dungeon Unleashed.
>
> ### [h2oGPT](https://github.com/h2oai/h2ogpt)
> h2oGPT is a large language model (LLM) fine-tuning framework and chatbot UI with document(s) question-answer capabilities. Documents help to ground LLMs against hallucinations by providing them context relevant to the instruction. h2oGPT is fully permissive Apache V2 open-source project for 100% private and secure use of LLMs and document embeddings for document question-answer.
>
> ---
>
> ## **Models**
>
> ### The Bloke
> The Bloke is a developer who frequently releases quantized (GPTQ) and optimized (GGML) open-source, user-friendly versions of AI Large Language Models (LLMs).
>
> These conversions of popular models can be configured and installed on personal (or professional) hardware, bringing bleeding-edge AI to the comfort of your home.
>
> Support [TheBloke](https://huggingface.co/TheBloke) here.
>
> - [https://ko-fi.com/TheBlokeAI](https://ko-fi.com/TheBlokeAI)
>
> ---
>
> #### 70B
> - [Llama-2-70B-chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-70B-chat-GPTQ)
> - [Llama-2-70B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-70B-Chat-GGML)
>
> - [Llama-2-70B-GPTQ](https://huggingface.co/TheBloke/Llama-2-70B-GPTQ)
> - [Llama-2-70B-GGML](https://huggingface.co/TheBloke/Llama-2-70B-GGML)
>
> - [llama-2-70b-Guanaco-QLoRA-GPTQ](https://huggingface.co/TheBloke/llama-2-70b-Guanaco-QLoRA-GPTQ)
>
> ---
>
> #### 30B
> - [30B-Epsilon-GPTQ](https://huggingface.co/TheBloke/30B-Epsilon-GPTQ)
>
> ---
>
> #### 13B
> - [Llama-2-13B-chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-13B-chat-GPTQ)
> - [Llama-2-13B-chat-GGML](https://huggingface.co/TheBloke/Llama-2-13B-chat-GGML)
>
> - [Llama-2-13B-GPTQ](https://huggingface.co/TheBloke/Llama-2-13B-GPTQ)
> - [Llama-2-13B-GGML](https://huggingface.co/TheBloke/Llama-2-13B-GGML)
>
> - [llama-2-13B-German-Assistant-v2-GPTQ](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GPTQ)
> - [llama-2-13B-German-Assistant-v2-GGML](https://huggingface.co/TheBloke/llama-2-13B-German-Assistant-v2-GGML)
>
> - [13B-Ouroboros-GGML](https://huggingface.co/TheBloke/13B-Ouroboros-GGML)
> - [13B-Ouroboros-GPTQ](https://huggingface.co/TheBloke/13B-Ouroboros-GPTQ)
>
> - [13B-BlueMethod-GGML](https://huggingface.co/TheBloke/13B-BlueMethod-GGML)
> - [13B-BlueMethod-GPTQ](https://huggingface.co/TheBloke/13B-BlueMethod-GPTQ)
>
> - [llama-2-13B-Guanaco-QLoRA-GGML](https://huggingface.co/TheBloke/llama-2-13B-Guanaco-QLoRA-GGML)
> - [llama-2-13B-Guanaco-QLoRA-GPTQ](https://huggingface.co/TheBloke/llama-2-13B-Guanaco-QLoRA-GPTQ)
>
> - [Dolphin-Llama-13B-GGML](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGML)
> - [Dolphin-Llama-13B-GPTQ](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GPTQ)
>
> - [MythoLogic-13B-GGML](https://huggingface.co/TheBloke/MythoLogic-13B-GGML)
> - [MythoBoros-13B-GPTQ](https://huggingface.co/TheBloke/MythoBoros-13B-GPTQ)
>
> - [WizardLM-13B-V1.2-GPTQ](https://huggingface.co/TheBloke/WizardLM-13B-V1.2-GPTQ)
> - [WizardLM-13B-V1.2-GGML](https://huggingface.co/TheBloke/WizardLM-13B-V1.2-GGML)
>
> - [OpenAssistant-Llama2-13B-Orca-8K-3319-GGML](https://huggingface.co/TheBloke/OpenAssistant-Llama2-13B-Orca-8K-3319-GGML)
>
> ---
>
> #### 7B
> - [Llama-2-7B-GPTQ](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ)
> - [Llama-2-7B-GGML](https://huggingface.co/TheBloke/Llama-2-7B-GGML)
>
> - [Llama-2-7b-Chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ)
> - [LLongMA-2-7B-GPTQ](https://huggingface.co/TheBloke/LLongMA-2-7B-GPTQ)
>
> - [llama-2-7B-Guanaco-QLoRA-GPTQ](https://huggingface.co/TheBloke/llama-2-7B-Guanaco-QLoRA-GPTQ)
> - [llama-2-7B-Guanaco-QLoRA-GGML](https://huggingface.co/TheBloke/llama-2-7B-Guanaco-QLoRA-GGML)
>
> - [llama2_7b_chat_uncensored-GPTQ](https://huggingface.co/TheBloke/llama2_7b_chat_uncensored-GPTQ)
> - [llama2_7b_chat_uncensored-GGML](https://huggingface.co/TheBloke/llama2_7b_chat_uncensored-GGML)
>
> ---
>
> ## **More Models**
> - [Any of KoboldAI's Models](https://huggingface.co/KoboldAI)
>
> - [Luna-AI-Llama2-Uncensored-GPTQ](https://huggingface.co/TheBloke/Luna-AI-Llama2-Uncensored-GPTQ)
>
> - [Nous-Hermes-Llama2-GGML](https://huggingface.co/TheBloke/Nous-Hermes-Llama2-GGML)
> - [Nous-Hermes-Llama2-GPTQ](https://huggingface.co/TheBloke/Nous-Hermes-Llama2-GPTQ)
>
> - [FreeWilly2-GPTQ](https://huggingface.co/TheBloke/FreeWilly2-GPTQ)
>
> ---
>
> ## **GL, HF!**
>
> Are you an LLM Developer? Looking for a shoutout or project showcase? Send me a message and I'd be more than happy to share your work and support links with the community.
>
> If you haven't already, consider subscribing to the free open-source AI community at !fosai@lemmy.world where I will do my best to make sure you have access to free open-source artificial intelligence on the bleeding edge.
>
> Thank you for reading!
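
As a rough way to read the hardware tables in the guide above, here is a minimal Python sketch that looks up which model sizes fit a given amount of VRAM. The minimum-VRAM figures are copied from the guide's tables; the names `models_that_fit`, `MIN_VRAM_4BIT_GB` and `MIN_VRAM_8BIT_GB` are made up for this example, and the 8 GB figure for the GTX 1080 is an assumption about the stock card rather than something stated in the thread.

```python
# Minimum total VRAM in GB, copied from the guide's tables.
MIN_VRAM_4BIT_GB = {
    "LLaMA-7B": 6,
    "LLaMA-13B": 10,
    "LLaMA-30B": 20,
    "LLaMA-65B": 40,
}
MIN_VRAM_8BIT_GB = {
    "LLaMA-7B": 10,
    "LLaMA-13B": 20,
    "LLaMA-30B": 40,
    "LLaMA-65B": 80,
}


def models_that_fit(vram_gb, table):
    """Return model names whose listed minimum VRAM is <= vram_gb, smallest first."""
    by_size = sorted(table.items(), key=lambda item: item[1])
    return [name for name, needed in by_size if needed <= vram_gb]


if __name__ == "__main__":
    gtx_1080_vram_gb = 8  # assumption: stock GTX 1080 with 8 GB of VRAM
    print("4-bit:", models_that_fit(gtx_1080_vram_gb, MIN_VRAM_4BIT_GB))
    print("8-bit:", models_that_fit(gtx_1080_vram_gb, MIN_VRAM_8BIT_GB))
```

Going by the guide's own numbers, an 8 GB card lands in 4-bit 7B territory. Treat that as a starting point rather than a guarantee, since actual VRAM use also depends on context length and the loader.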

huggingface.co


https://huggingface.co/TheBloke Currently testing https://huggingface.co/TheBloke/Llama-2-13B-chat-GPTQ

huggingface.co


Giving this one a go! https://huggingface.co/TheBloke/Llama-2-13B-chat-GPTQ

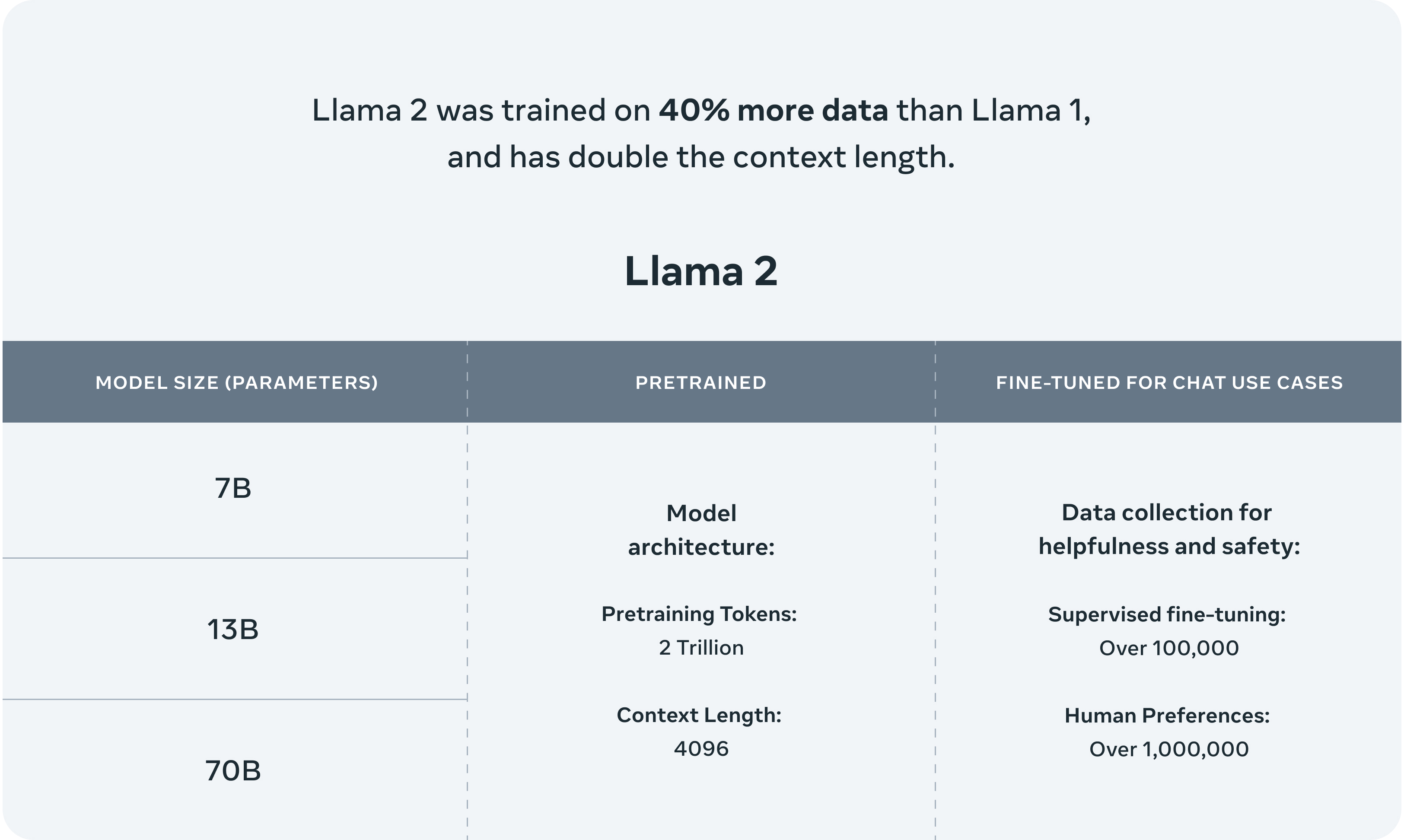

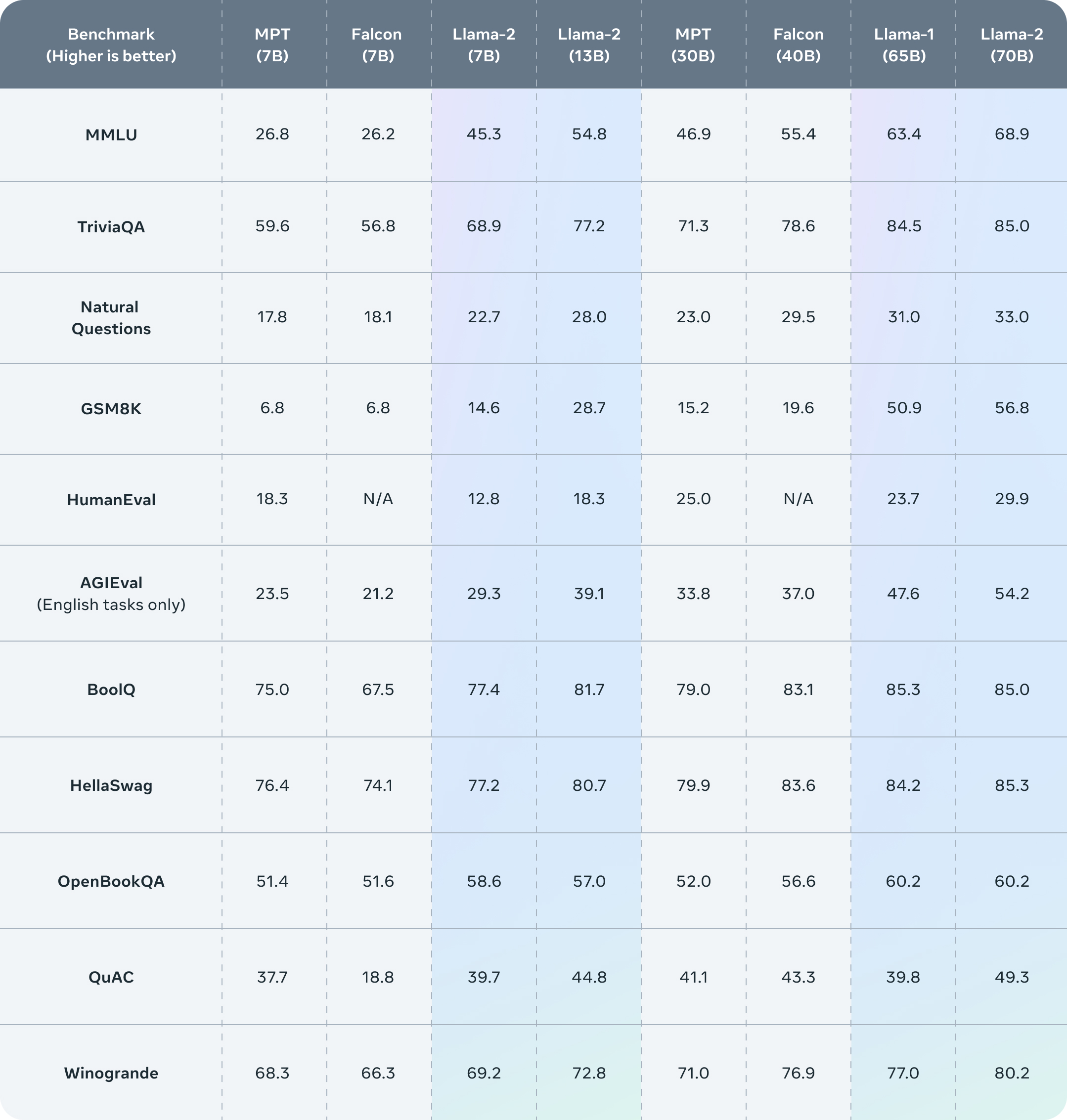

cross-posted from: https://lemmy.world/post/1750098

> ## **[Introducing Llama 2 - Meta's Next Generation Free Open-Source Artificially Intelligent Large Language Model](https://ai.meta.com/llama/)**
>
> It's incredible it's already here! This is great news for everyone in free open-source artificial intelligence.
>
> Llama 2 unleashes Meta's (previously) closed model (Llama) to become free open-source AI, accelerating access and development for large language models (LLMs).
>
> This marks a significant step in machine learning and deep learning technologies. With this move, a widely supported LLM can become a viable choice for businesses, developers, and entrepreneurs to innovate our future using a model that the community has been eagerly awaiting since its [initial leak earlier this year](https://www.theverge.com/2023/3/8/23629362/meta-ai-language-model-llama-leak-online-misuse).
>
> - [Meta Announcement](https://ai.meta.com/llama/)
> - [Meta Overview](https://ai.meta.com/resources/models-and-libraries/llama/)
> - [Github](https://github.com/facebookresearch/llama/tree/main)
> - [Paper](https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/)
>
> **Here are some highlights from the [official Meta AI announcement](https://ai.meta.com/llama/):**
>
> ## **Llama 2**
>
> > In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. Our fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases.
> >
> > Our models outperform open-source chat models on most benchmarks we tested, and based on our human evaluations for helpfulness and safety, may be a suitable substitute for closed-source models. We provide a detailed description of our approach to fine-tuning and safety improvements of Llama 2-Chat in order to enable the community to build on our work and contribute to the responsible development of LLMs.
>
> > Llama 2 pretrained models are trained on 2 trillion tokens, and have double the context length than Llama 1. Its fine-tuned models have been trained on over 1 million human annotations.
>
> ## **Inside the Model**
>
> - [Technical details](https://ai.meta.com/resources/models-and-libraries/llama/)
>
> ### With each model download you'll receive:
>
> - Model code
> - Model Weights
> - README (User Guide)
> - Responsible Use Guide
> - License
> - Acceptable Use Policy
> - Model Card
>
> ## **Benchmarks**
>
> > Llama 2 outperforms other open source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests. It was pretrained on publicly available online data sources. The fine-tuned model, Llama-2-chat, leverages publicly available instruction datasets and over 1 million human annotations.
>
> ## **RLHF & Training**
>
> > Llama-2-chat uses reinforcement learning from human feedback to ensure safety and helpfulness. Training Llama-2-chat: Llama 2 is pretrained using publicly available online data. An initial version of Llama-2-chat is then created through the use of supervised fine-tuning. Next, Llama-2-chat is iteratively refined using Reinforcement Learning from Human Feedback (RLHF), which includes rejection sampling and proximal policy optimization (PPO).
>
> ## **The License**
>
> > Our model and weights are licensed for both researchers and commercial entities, upholding the principles of openness. Our mission is to empower individuals, and industry through this opportunity, while fostering an environment of discovery and ethical AI advancements.
>
> > **Partnerships**
>
> > We have a broad range of supporters around the world who believe in our open approach to today’s AI - companies that have given early feedback and are excited to build with Llama 2, cloud providers that will include the model as part of their offering to customers, researchers committed to doing research with the model, and people across tech, academia, and policy who see the benefits of Llama and an open platform as we do.
>
> ## **The/CUT**
>
> With the release of Llama 2, Meta has opened up new possibilities for the development and application of large language models. This free open-source AI not only accelerates access but also allows for greater innovation in the field.
>
> **Take Three**:
>
> - **Video Game Analogy**: Just like getting a powerful, rare (or previously banned) item drop in a game, Llama 2's release gives developers a powerful tool they can use and customize for their unique quests in the world of AI.
> - **Cooking Analogy**: Imagine if a world-class chef decided to share their secret recipe with everyone. That's Llama 2, a secret recipe now open for all to use, adapt, and improve upon in the kitchen of AI development.
> - **Construction Analogy**: Llama 2 is like a top-grade construction tool now available to all builders. It opens up new possibilities for constructing advanced AI structures that were previously hard to achieve.
>
> ## **Links**
>
> Here are the key resources discussed in this post:
>
> - [Meta Announcement](https://ai.meta.com/llama/)
> - [Meta Overview](https://ai.meta.com/resources/models-and-libraries/llama/)
> - [Github](https://github.com/facebookresearch/llama/tree/main)
> - [Paper](https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/)
> - [Technical details](https://ai.meta.com/resources/models-and-libraries/llama/)
>
> Want to get started with free open-source artificial intelligence, but don't know where to begin?
>
> Try starting here:
>
> - [FOSAI Welcome Message](https://lemmy.world/post/67758)
> - [FOSAI Crash Course](https://lemmy.world/post/76020)
> - [FOSAI Nexus Resource Hub](https://lemmy.world/post/814816)
>
> If you found anything else about this post interesting - consider subscribing to !fosai@lemmy.world where I do my best to keep you in the know about the most important updates in free open-source artificial intelligence.
>
> This particular announcement is exciting to me because it may popularize open-source principles and practices for other enterprises and corporations to follow.
>
> We should see some interesting models emerge out of Llama 2. I for one am looking forward to seeing where this will take us next. Get ready for another wave of innovation! This one is going to be big.

Looking at you, *Bark_TTS*! I tried a few and eventually settled on Adobe Podcast. It's easy to generate whatever audio clips you want, and then you can clean them with Podcast and the free limit is more than enough.

huggingface.co


Not sure why it took me so long to find this.

github.com


I just discovered this repo, it looks *really* useful for creating AI voices https://github.com/rsxdalv/tts-generation-webui

huggingface.co


[Huggingface](https://huggingface.co/TheBloke/Baize-v2-13B-SuperHOT-8K-GPTQ) This has been the best so far. There's some weird behaviour sometimes, but maybe that's my parameters. For some characters, this has been the best at keeping them in character and progressing the story!

huggingface.co


https://huggingface.co/TheBloke contains the latest exLlama SuperHOT 8K context models

Linked original reddit post, but this didn't work for me. I had to take a bunch of extra steps, so I've written a tutorial. The original instructions, which I'll refer to, are here so you don't have to visit reddit.

**My revised tutorial with all instructions will follow this in the replies. Please post questions as a new post in this community; I've locked this thread so that the tutorial remains easily accessible.**

Zyin 24 points 2 months ago*

Instructions on how to get this set up if you've never used Jupyter before, like me. I'm not an expert at this, so don't respond asking for technical help.

If you've never done stuff that needs Python before, you'll need to install Pip and Git. Google for the download links. If you have Automatic1111 installed already, you already have Pip and Git.

Install the repo. It will be installed in the folder where you open the cmd window:

`git clone https://github.com/serp-ai/bark-with-voice-clone`

Open a new cmd window in the newly downloaded repo's folder (or cd into it) and run its installation stuff:

`pip install .`

Install Jupyter notebook. It's basically Google Colab, but run locally:

`pip install jupyterlab` (this one may not be needed, I did it anyway)

`pip install notebook`

If you are on Windows, you'll need these to do audio code stuff with Python:

`pip install soundfile`

`pip install ipywidgets`

You need to have Torch 2 installed. You can do that with this command (it will take a while to download/install):

`pip3 install numpy --pre torch torchvision torchaudio --force-reinstall --index-url https://download.pytorch.org/whl/nightly/cu118`

To check your current Torch version, open a new cmd window and type these in one at a time:

`python`

`import torch`

`print(torch.__version__) #(mine says 2.1.0.dev20230421+cu118)`

Now everything is installed. Create a folder called "output" in the bark folder, which will be needed later to prevent a permissions error.

Run Jupyter Notebook while in the bark folder:

`jupyter notebook`

This will open a new browser tab with the Jupyter interface. Navigate to /notebooks/generate.ipynb

This is very similar to Google Colab, where you run blocks of code. Click on the first block of code and click Run. If the code block has a "[*]" next to it, then it is still processing; just give it a minute to finish. This will take a while and download a bunch of stuff.

If it manages to finish without errors, run blocks 2 and 3. In block 3, change the line to `filepath = "output/audio.wav"` to prevent a permissions-related error (remove the leading "/").

You can get different voices by changing the voice_name variable in block 1. Voices are installed at: `bark\assets\prompts`

For reference, on my 3060 12GB it took 90 seconds to generate 13 seconds of audio. The voice models that come out of the box create a robotic-sounding voice, not even close to the quality of ElevenLabs. The voice that I created using /notebooks/clone_voice.ipynb with my own voice turned out terrible and was completely unusable. Maybe I did something wrong with that, not sure.

If you want to test the voice clone using your own voice, and you record a voice sample using Windows Voice Recorder, you can convert the .m4a file to .wav with ffmpeg (separate download):

`ffmpeg -i "C:\Users\USER\Documents\Sound recordings\Recording.m4a" "C:\path\to\bark-with-voice-clone\`

**___**
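
A few of the checks in the tutorial above (Torch 2 installed, an "output" folder present, an ffmpeg conversion command) can be sketched as a small Python preflight script. This is only an illustration under stated assumptions: the helper names `is_torch2`, `ensure_output_dir` and `ffmpeg_to_wav_cmd` are hypothetical, the file names in the demo are placeholders, and the script only builds the `ffmpeg -i <input> <output>` argument list without running it.

```python
from pathlib import Path


def is_torch2(version: str) -> bool:
    """True if a version string such as '2.1.0.dev20230421+cu118' has major version >= 2."""
    major = version.split(".", 1)[0]
    return major.isdigit() and int(major) >= 2


def ensure_output_dir(repo_dir) -> Path:
    """Create the 'output' folder inside the repo folder if it is missing, and return its path."""
    out = Path(repo_dir) / "output"
    out.mkdir(parents=True, exist_ok=True)
    return out


def ffmpeg_to_wav_cmd(src, dst):
    """Build (but do not run) the argument list for: ffmpeg -i <src> <dst>."""
    return ["ffmpeg", "-i", str(src), str(dst)]


if __name__ == "__main__":
    try:
        import torch  # only needed for the version check

        status = "ok" if is_torch2(torch.__version__) else "older than Torch 2"
        print("torch", torch.__version__, status)
    except ImportError:
        print("torch is not installed")

    # Assumption: the script is started from inside the cloned repo folder.
    out_dir = ensure_output_dir(".")
    print("output folder:", out_dir)

    # Placeholder file names; pass the list to subprocess.run() to actually convert.
    print(ffmpeg_to_wav_cmd("Recording.m4a", out_dir / "Recording.wav"))
```

Building the command as a list rather than a single string sidesteps quoting problems with paths that contain spaces, such as the "Sound recordings" folder in the example above.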

really good tips here!

www.youtube.com


Tested it myself, huge improvement!

I'll try to post as much good content here as possible to get it started.